Hand Pose Classification


Project Overview

Approach

Method I: Deep Learning-Based Classification

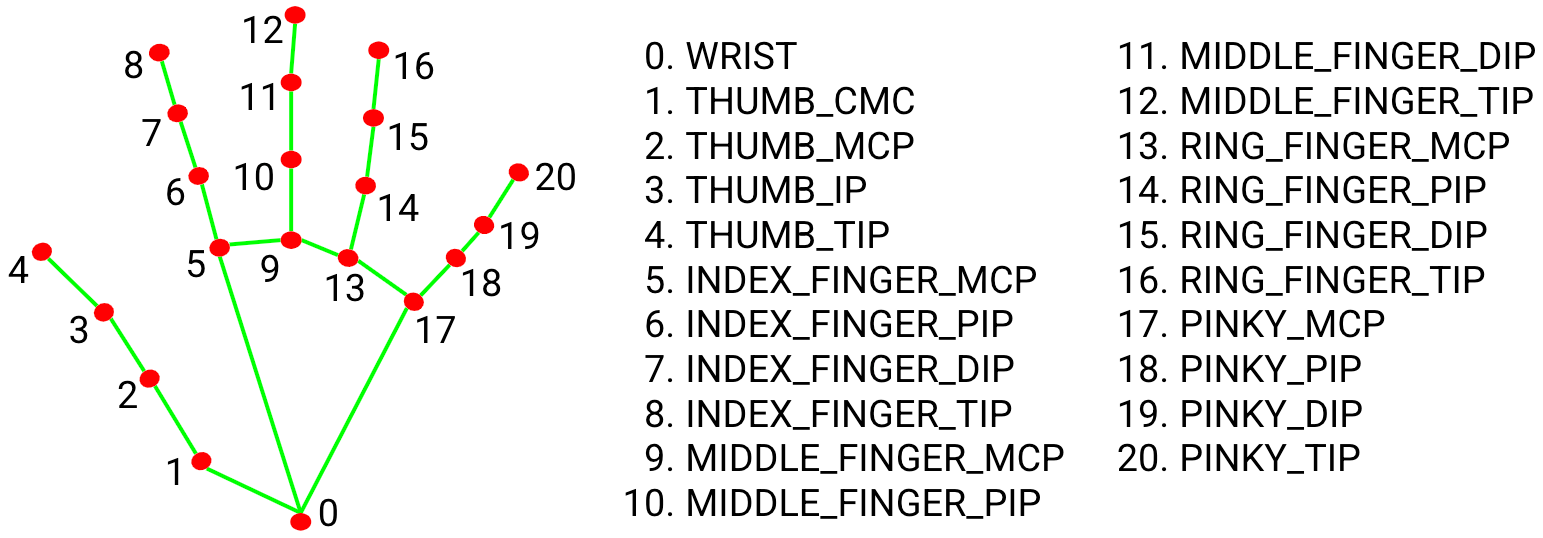

The first approach uses deep learning to classify hand poses from landmarks extracted from the images. It relies on MediaPipe’s Python Solutions API, which provides a reliable way to extract 3D hand landmarks.

Key Steps

- Landmark Extraction: Use MediaPipe’s Hands solution (mp.solutions.hands) to detect key points on the hand, including fingertips and joints.

- Feature Engineering: Generate meaningful features based on the positions of these landmarks.

- Model Training: Train a deep learning model (e.g., CNN or RNN) to classify the gestures using TensorFlow.

- Input Pipeline: Utilize tf.data.Dataset for efficient data loading and preprocessing.


Method II: Palm Zone Analysis

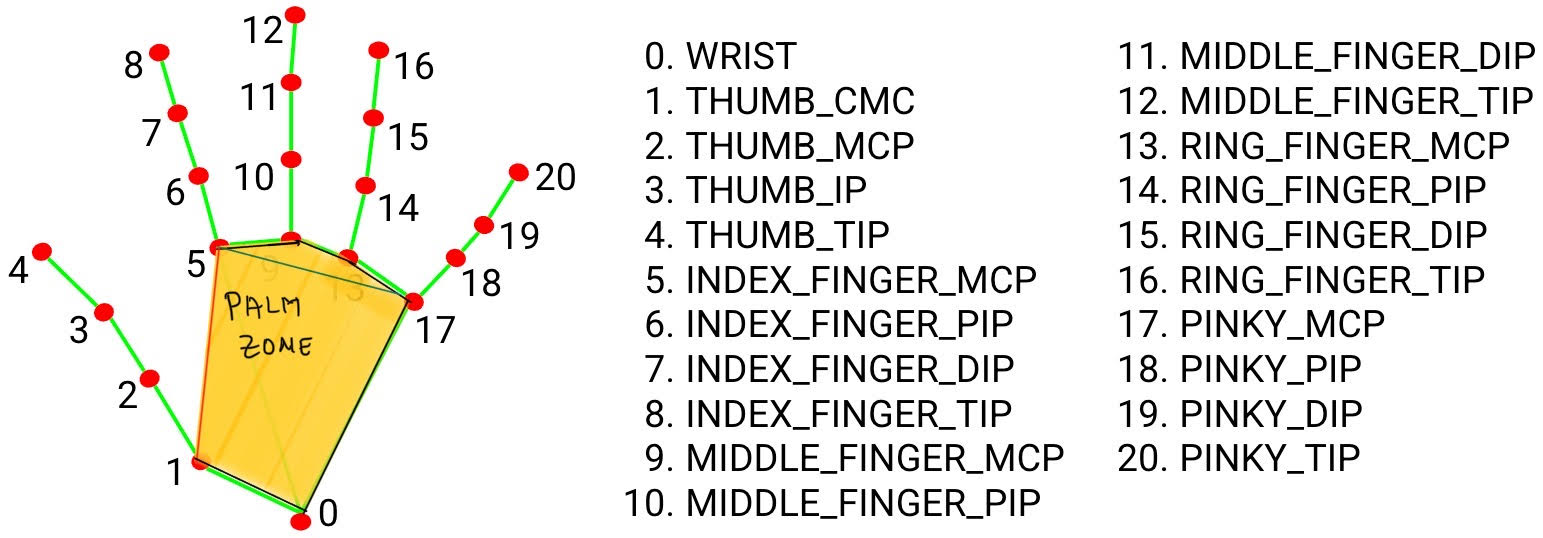

The second approach focuses on analyzing the spatial relationships between key landmarks to infer hand poses without relying on deep learning models. This method is particularly useful as a baseline for comparison with more complex models.

Key Steps

- Palm Zone Definition: Define a palm zone by drawing horizontal and vertical lines based on landmark positions (e.g., between the index finger’s MCP and pinky’s MCP for the horizontal line, and between the thumb’s CMC and index finger’s MCP for the vertical line).

- Finger Tip Classification: Identify which fingers are within the palm zone.

- Pose Classification: Use a predefined set of rules to map the presence of fingers in the palm zone to specific gestures.
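
The palm-zone test described in these steps can be sketched as follows. This is a minimal illustration, not the repository's code: it assumes landmarks arrive as 21 (x, y) pairs in MediaPipe's hand landmark order, treats a finger as "in the palm zone" when its tip falls on the wrist side of the index-MCP/pinky-MCP line, and treats the thumb as in the zone when its tip falls on the pinky side of the thumb-CMC/index-MCP line. The helper names `side` and `fingers_in_palm_zone` are hypothetical.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float]

# MediaPipe hand landmark indices used by the palm-zone lines.
WRIST, THUMB_CMC, INDEX_MCP, PINKY_MCP = 0, 1, 5, 17
FINGER_TIPS = {"thumb": 4, "index": 8, "middle": 12, "ring": 16, "pinky": 20}


def side(a: Point, b: Point, p: Point) -> float:
    """Signed area test: the sign tells which side of line a->b the point p is on."""
    return (b[0] - a[0]) * (p[1] - a[1]) - (b[1] - a[1]) * (p[0] - a[0])


def fingers_in_palm_zone(landmarks: Sequence[Point]) -> List[str]:
    """Return the names of fingers whose tips fall inside the (assumed) palm zone."""
    index_mcp, pinky_mcp = landmarks[INDEX_MCP], landmarks[PINKY_MCP]
    thumb_cmc, wrist = landmarks[THUMB_CMC], landmarks[WRIST]

    # Reference sides: the wrist marks the palm side of the "horizontal" line,
    # the pinky MCP marks the palm side of the "vertical" line.
    wrist_side = side(index_mcp, pinky_mcp, wrist)
    pinky_side = side(thumb_cmc, index_mcp, pinky_mcp)

    inside = []
    for name, tip_index in FINGER_TIPS.items():
        tip = landmarks[tip_index]
        if name == "thumb":
            in_zone = side(thumb_cmc, index_mcp, tip) * pinky_side > 0
        else:
            in_zone = side(index_mcp, pinky_mcp, tip) * wrist_side > 0
        if in_zone:
            inside.append(name)
    return inside
```

Comparing against a reference side, rather than checking raw y or x values, keeps the test independent of whether the hand is upright, rotated, or mirrored.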

Dataset Collection

The dataset is sourced from the Dataset. The images were captured with near-infrared cameras, which gives consistent and reliable hand pose captures. For simplicity, we focus on a subset of gestures: C, Five, Four, Hang, Heavy, L, OK, Palm, Three, Two.

Data Preprocessing

- Download and Organize: Transfer the dataset to a centralized directory structure.

- Annotate Landmarks: Use Mediapipe to extract landmarks from each image.

- Save Annotations: Store the landmarks, gesture labels, and file names in CSV files for later use.

Clone the repository

git clone https://github.com/sher-somas/Hand-pose-classification.git

Input Pipeline

The pipeline includes:

- Image Reading: Load images from the dataset directory.

- Landmark Extraction: Use Mediapipe to detect landmarks.

- Feature Normalization: Normalize the landmark coordinates.
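
The write-up does not say which normalization is used, so the following is only one plausible sketch: it moves the wrist (landmark 0) to the origin and divides by the largest wrist-to-landmark distance, which makes the features independent of where the hand sits in the frame and how large it appears. The function name `normalize_landmarks` is hypothetical.

```python
import numpy as np


def normalize_landmarks(landmarks: np.ndarray) -> np.ndarray:
    """Translate landmarks so the wrist is the origin, then scale to unit size.

    `landmarks` holds 21 points with 2 or 3 coordinates each, either as a
    (21, d) array or as a flat vector. The result is a flat float32 vector.
    """
    points = np.asarray(landmarks, dtype=np.float32).reshape(21, -1)
    centered = points - points[0]  # wrist becomes (0, 0, 0)
    scale = np.linalg.norm(centered, axis=1).max()  # farthest point from the wrist
    if scale > 0:
        centered = centered / scale
    return centered.reshape(-1)
```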

Training Structure

The training framework is modular and scalable, with the following components:

- Configuration: All configuration parameters (e.g., model architecture, training flags) are managed in src/train.py.

- Model Architecture: Customizable models are implemented in src/models.py, allowing for easy experimentation with different architectures.

- Testing Options: The system supports both live webcam testing and batch testing on the test set.

Dataset creation and input pipeline

This section covers downloading the data, creating the dataset with hand landmarks, and building the input pipeline.


Creating dataset with hand landmarks

For each image in the gesture directory:

- Run MediaPipe on the image.

- Get the landmarks.

- Save the landmarks, gesture label, and file name to a CSV file.

Usage:

python3 create_data.py --gesture_folder < > --save_dir < > --save_images < > --name_csv < >

- gesture_folder -> name of the directory containing the gesture folders.

- save_dir -> name of the directory in which to save annotated images if --save_images is True.

- save_images -> flag to save annotated images or not.

- name_csv -> name of the CSV file containing the hand landmarks.

This runs MediaPipe on the gesture folders and creates a CSV file containing the landmarks and the label.
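
As a rough sketch of what such a script might do (this is not the actual create_data.py), the code below walks a directory of gesture folders, runs MediaPipe's Hands solution on each image, and writes one CSV row per detected hand. The column layout (`file_name`, `label`, then `x0, y0, z0, ...`) and all function names are assumptions made for illustration. OpenCV and MediaPipe are imported inside the functions that need them so the CSV helpers can be reused on their own.

```python
import csv
from pathlib import Path
from typing import List, Optional, Sequence, Tuple

Landmark = Tuple[float, float, float]


def csv_header(num_landmarks: int = 21) -> List[str]:
    """Column names: file name, label, then x/y/z for each landmark (assumed layout)."""
    columns = ["file_name", "label"]
    for i in range(num_landmarks):
        columns += [f"x{i}", f"y{i}", f"z{i}"]
    return columns


def to_row(file_name: str, label: str, landmarks: Sequence[Landmark]) -> list:
    """Flatten one hand's landmarks into a single CSV row."""
    row = [file_name, label]
    for x, y, z in landmarks:
        row += [x, y, z]
    return row


def extract_landmarks(image_path: Path, hands) -> Optional[List[Landmark]]:
    """Run a MediaPipe Hands instance on one image; None if no hand is found."""
    import cv2  # imported here so the helpers above work without OpenCV

    image = cv2.imread(str(image_path))
    if image is None:  # unreadable or non-image file
        return None
    results = hands.process(cv2.cvtColor(image, cv2.COLOR_BGR2RGB))
    if not results.multi_hand_landmarks:
        return None
    return [(lm.x, lm.y, lm.z) for lm in results.multi_hand_landmarks[0].landmark]


def create_dataset(gesture_folder: str, csv_path: str) -> int:
    """Write landmarks for every image under gesture_folder/<label>/ to csv_path."""
    import mediapipe as mp

    written = 0
    with mp.solutions.hands.Hands(static_image_mode=True, max_num_hands=1) as hands, \
            open(csv_path, "w", newline="") as f:
        writer = csv.writer(f)
        writer.writerow(csv_header())
        for gesture_dir in sorted(Path(gesture_folder).iterdir()):
            if not gesture_dir.is_dir():
                continue
            for image_path in sorted(gesture_dir.iterdir()):
                landmarks = extract_landmarks(image_path, hands)
                if landmarks is None:
                    continue  # skip images where no hand was detected
                writer.writerow(to_row(image_path.name, gesture_dir.name, landmarks))
                written += 1
    return written
```

The folder name doubles as the gesture label, which matches the "directory containing folders of gestures" layout described above.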

Creating input pipeline

- The input pipeline for this step is implemented in src/data.py, which is fairly self-explanatory.
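
For readers who want a picture of such a pipeline without opening the file, here is a minimal tf.data sketch. It is not the code in src/data.py: it assumes the landmarks have already been loaded into a float array of shape (N, 63) with integer class labels, and the function name `make_dataset` is hypothetical.

```python
import numpy as np
import tensorflow as tf


def make_dataset(features: np.ndarray, labels: np.ndarray,
                 batch_size: int = 32, training: bool = True) -> tf.data.Dataset:
    """Build a batched tf.data pipeline from in-memory landmark features."""
    dataset = tf.data.Dataset.from_tensor_slices(
        (features.astype(np.float32), labels.astype(np.int32))
    )
    if training:
        # Shuffle over the whole set; landmark data is small enough to fit in memory.
        dataset = dataset.shuffle(buffer_size=len(features))
    # Prefetch lets data preparation overlap with model execution.
    return dataset.batch(batch_size).prefetch(tf.data.AUTOTUNE)
```

Shuffling is switched off for validation and test data so that evaluation runs are reproducible.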

Training structure

- src/train.py contains all the configuration requirements to train the model.

- src/models.py contains the model architectures. Add your models here.

- src/live_test.py tests the model on a live webcam feed.
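
To give a sense of what a model added to src/models.py could look like, here is a small Keras sketch. It is an assumption, not one of the repository's architectures: it uses a plain fully connected network (rather than a CNN or RNN) on a 63-value input, that is 21 landmarks with x, y, z each, and 10 output classes for the ten gestures in the subset. The name `build_model` and the layer sizes are illustrative.

```python
import tensorflow as tf


def build_model(input_dim: int = 63, num_classes: int = 10) -> tf.keras.Model:
    """Small fully connected classifier over flattened hand landmarks."""
    model = tf.keras.Sequential([
        tf.keras.Input(shape=(input_dim,)),
        tf.keras.layers.Dense(128, activation="relu"),
        tf.keras.layers.Dropout(0.2),  # light regularization for a small dataset
        tf.keras.layers.Dense(64, activation="relu"),
        tf.keras.layers.Dense(num_classes, activation="softmax"),
    ])
    model.compile(
        optimizer="adam",
        loss="sparse_categorical_crossentropy",  # expects integer class labels
        metrics=["accuracy"],
    )
    return model
```

A model built this way can be trained with `model.fit(train_dataset, validation_data=val_dataset, epochs=...)` on batched (features, label) datasets.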

Inference

A CSV file at examples/test.csv contains the ground-truth pose labels along with the file names and the landmarks.
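
A batch check against such a file could look like the sketch below. The column names (`file_name`, `label`) are assumptions, since the exact header of examples/test.csv is not shown here, and `predict` stands for any hypothetical function that maps a list of landmark values to a gesture name.

```python
import csv
from typing import Callable, List, Sequence


def evaluate_csv(csv_path: str,
                 predict: Callable[[List[float]], str],
                 label_column: str = "label",
                 skip_columns: Sequence[str] = ("file_name",)) -> float:
    """Return the fraction of rows whose predicted gesture matches the ground truth."""
    correct = 0
    total = 0
    with open(csv_path, newline="") as f:
        for row in csv.DictReader(f):
            truth = row[label_column]
            # Every remaining column is treated as a numeric landmark value.
            features = [
                float(value) for name, value in row.items()
                if name != label_column and name not in skip_columns
            ]
            correct += int(predict(features) == truth)
            total += 1
    return correct / total if total else 0.0
```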

To run inference on webcam, provide the model directory:

python3 live_test.py --model_dir < >

Method II Implementation

Key Insights

Palm Zone Analysis offers a lightweight solution with minimal computational overhead (~5ms in most cases).

Advantages: No need for deep learning model training, and the method serves as a solid baseline for comparison.

Disadvantages: Requires hand-coded rules for fingertip positions and cannot separate gestures that leave the same fingers in the palm zone, such as “Five” and “Palm”.

Algorithmic Steps

Horizontal Line Check: Verify whether each fingertip lies below the horizontal line drawn between the index finger’s MCP (5) and the pinky’s MCP (17).

Vertical Line Check: Determine whether a fingertip lies to the left or right of the vertical line drawn between the thumb’s CMC (1) and the index finger’s MCP (5).

Gesture Classification: Map the combinations of these checks to specific gestures using a predefined mapping.

List of gestures I have trained on

| Gesture | Fingers in palm zone |
|---|---|
| L | middle, ring, pinky |
| OK | index, thumb tip |
| PALM | None |
| TWO | ring, pinky, thumb |
| THREE | pinky, thumb |
| FOUR | thumb |
| FIVE | None |
| HANG | middle, ring, index |
| HEAVY | middle, ring |
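
The table translates directly into a lookup from the set of fingers in the palm zone to the matching gesture names. The sketch below is one way to encode it; the names `GESTURE_RULES` and `classify` are hypothetical. Because PALM and FIVE share the same (empty) set, the lookup returns both candidates for that case.

```python
from typing import Dict, FrozenSet, Iterable, List

# Fingers found in the palm zone -> candidate gestures, taken from the table.
GESTURE_RULES: Dict[FrozenSet[str], List[str]] = {
    frozenset({"middle", "ring", "pinky"}): ["L"],
    frozenset({"index", "thumb"}): ["OK"],
    frozenset(): ["PALM", "FIVE"],  # indistinguishable with this rule set
    frozenset({"ring", "pinky", "thumb"}): ["TWO"],
    frozenset({"pinky", "thumb"}): ["THREE"],
    frozenset({"thumb"}): ["FOUR"],
    frozenset({"middle", "ring", "index"}): ["HANG"],
    frozenset({"middle", "ring"}): ["HEAVY"],
}


def classify(fingers_in_zone: Iterable[str]) -> List[str]:
    """Return the gestures matching the given fingers, or [] if no rule applies."""
    return GESTURE_RULES.get(frozenset(fingers_in_zone), [])
```

Using a frozenset as the key makes the lookup independent of the order in which fingers are reported.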

If you need any explanations, please feel free to contact me at shreyas0906@gmail.com